Difference between revisions of "PVE LXC Containers"

| (18 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

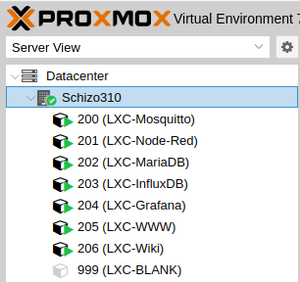

[[File:Successful-Containers.png{{!}}300px{{!}}right{{!}}thumb]] | [[File:Successful-Containers.png{{!}}300px{{!}}right{{!}}thumb]] | ||

So far, LXC containers are just like regular Linux (for the most part...) & the same procedures apply when building. | So far, LXC containers are just like regular Linux (for the most part...) & the same procedures apply when building. | ||

In fact, I have created a large set of containers on my testbed server simply by following the [[Server Building{{!}}Server Building]] outlines here on this site. | In fact, I have created a large set of containers on my testbed server simply by following the [[Server Building{{!}}Server Building]] outlines here on this site. | ||

''Heck... These days, I tend to default to Debian LXC containers unless there's a VERY good reason to do otherwise...'' | |||

= Building a Container = | = Building a Container = | ||

== Templates == | == Templates == | ||

| Line 34: | Line 35: | ||

=== CLI method === | === CLI method === | ||

* <code>pct create '''VMID''' '''TEMPLATE''' --hostname '''HOSTNAME''' --password '''PASSWORD''' --rootfs '''LOCATION''':'''SIZE''' --cores '''CORES''' --memory '''RAM''' --swap '''SWAP''' --unprivileged '''<1{{!}}0>''' --features '''nesting=<1{{!}}0>''' --net0 name='''eth0''',bridge='''BRIDGE''',firewall='''<1{{!}}0>''',ip=''' | * <code>pct create '''VMID''' '''TEMPLATE''' --hostname '''HOSTNAME''' --password '''PASSWORD''' --rootfs '''LOCATION''':'''SIZE''' --cores '''CORES''' --memory '''RAM''' --swap '''SWAP''' --unprivileged '''<1{{!}}0>''' --features '''nesting=<1{{!}}0>''' --net0 name='''eth0''',bridge='''BRIDGE''',firewall='''<1{{!}}0>''',ip='''<(IPv4/CIDR{{!}}dhcp{{!}}manual)>'''</code> | ||

* EXAMPLE (same as defaults in UI, but DHCP for IPv4 & unique identifiers...): | * EXAMPLE (same as defaults in UI, but DHCP for IPv4 & unique identifiers...): | ||

** <code>pct create '''666''' '''local:vztmpl/debian-11-standard_11.6-1_amd64.tar.zst''' --hostname '''LXC-test''' --password '''nuggit''' --rootfs '''local-lvm''':'''8''' --cores '''1''' --memory '''512''' --swap '''512''' --unprivileged '''1''' --features '''nesting=1''' --net0 name='''eth0''',bridge='''vmbr0''',firewall='''1''',ip='''dhcp'''</code> | ** <code>pct create '''666''' '''local:vztmpl/debian-11-standard_11.6-1_amd64.tar.zst''' --hostname '''LXC-test''' --password '''nuggit''' --rootfs '''local-lvm''':'''8''' --cores '''1''' --memory '''512''' --swap '''512''' --unprivileged '''1''' --features '''nesting=1''' --net0 name='''eth0''',bridge='''vmbr0''',firewall='''1''',ip='''dhcp'''</code> | ||

| Line 51: | Line 52: | ||

I'd suggest adding a regular user so that you can then SSH into the container. | I'd suggest adding a regular user so that you can then SSH into the container. | ||

I have a set of configurations that I do to pretty much every LXC container I build. Check [[CopyPasta#For_doing_basic_setup_of_an_LXC_(or_pretty_much_any_Linux_VM...):{{!}}this entry]]. | |||

=== Root Access via SSH === | |||

By default a Proxmox LXC container allows root login only with public key authentication. | |||

ATM... To my knowledge, you either have to set up the public key during build of the LXC or mess about grabbing it from your work machine from inside the LXC itself. | |||

To login to a container with username/password login to your Proxmox host and attach to the container with the following command. | |||

* <code>lxc-attach --name '''VMID'''</code> | |||

(Where VMID is the number of the container) | |||

Edit '''sshd_config''' | |||

* <code>vi /etc/ssh/sshd_config</code> | |||

and change the line '''<code>#PermitRootLogin without-password</code>''' to '''<code>PermitRootLogin yes</code>''' | |||

Restart ssh service for the changes to take effect. | |||

* <code>service ssh restart</code> | |||

(In theory, you should be able to [[HowTo -_ssh#Make_it_easier_to_connect{{!}}set up the public key normally]] now & then revert the ssh_config change.) | |||

== Further Tricks == | |||

One useful trick is to add storage to the LXC that's not managed by PVE directly. (For instance, I'm building an LXC for use in backing up & reconfiguring local machines & VMs. I'd like to use a dedicated drive in a hot-swap bay on my server.) | |||

Apparently, either [https://pve.proxmox.com/wiki/Linux_Container#_bind_mount_points "Bind Mount Points"] or [https://pve.proxmox.com/wiki/Linux_Container#_device_mount_points "Device Mount Points"] are the solution... | |||

[https://forum.proxmox.com/threads/mount-external-hdd-in-lxc-container.50897/ This thread] and [https://forum.proxmox.com/threads/container-with-physical-disk.42280/ this one] have some helpful discussion... | |||

= Pre-Built Containers = | = Pre-Built Containers = | ||

| Line 56: | Line 86: | ||

= Stumbling Blocks & Oddities = | = Stumbling Blocks & Oddities = | ||

== Time Zone Settings == | |||

Apparently, LXCs default to UTC even if your server is set to your local timezone. | |||

Fortunately, it's not difficult to correct this. | |||

* <code>sudo dpkg-reconfigure tzdata</code> | |||

or | |||

* pick another method from [https://itslinuxguide.com/debian-set-timezone/ this web page]. | |||

== DHCP / DNS Difficulties == | == DHCP / DNS Difficulties == | ||

For some reason, If I set up a container with both IPv4 & IPv6 using '''DHCP''', resolved will <span style="text-decoration: underline;" >ONLY use IPv6</span>. This is kind of an issue if I want to use IPv4 DNS... | For some reason, If I set up a container with both IPv4 & IPv6 using '''DHCP''', resolved will <span style="text-decoration: underline;">ONLY use IPv6</span>. This is kind of an issue if I want to use IPv4 DNS... | ||

Fortunately, simply setting IPv6 to '''Static''' fixes the issue. | Fortunately, simply setting IPv6 to '''Static''' fixes the issue. | ||

| Line 64: | Line 103: | ||

LXC containers seem to default to '''xterm.js''' rather than the '''noVNC''' console standard with regular VMs. | LXC containers seem to default to '''xterm.js''' rather than the '''noVNC''' console standard with regular VMs. | ||

This isn't a bad thing... | This isn't a bad thing... Just surprised me. | ||

== Standard LXC containers built upon Debian seem to block non-root users from using ping... == | == Standard LXC containers built upon Debian seem to block non-root users from using ping... == | ||

| Line 74: | Line 113: | ||

* <code>sudo chmod u+s /usr/bin/ping</code> | * <code>sudo chmod u+s /usr/bin/ping</code> | ||

== LXC containers built on Debian don't seem to get usbutils == | |||

* <code>sudo apt install usbutils</code> | |||

= Container Building Tips = | = Container Building Tips = | ||

== Converting unpriveledged container to priveledged == | |||

# Backup the container | |||

# shutdown the container | |||

# restore from the backup and select "Priveledged" in the dialog. | |||

== Adding NFS to an LXC == | |||

'''MUST BE A PRIVELEDGED CONTAINER''' | |||

in: Options / Features<br>enable '''Nesting''' and '''NFS''' | |||

Then go ahead & [[Setting up NFS for file sharing{{!}}set up NFS as normal]]. | |||

== Using a VPN (OpenVPN or TailScale) on an LXC == | |||

LXCs do not give access to a '''/dev/tun''' device & this is needed for Tailscale to work. | |||

If you're running the LXC on Proxmox, You can add this feature by editing the containers configuration file. | |||

The following instructions are copied (and/or adapted) from [https://tailscale.com/kb/1130/lxc-unprivileged this page]. | |||

For example, using Proxmox to host an LXC with ID 112, the following lines would be added to /etc/pve/lxc/112.conf: | |||

* <code>vi /etc/pve/lxc/112.conf</code> | |||

lxc.cgroup2.devices.allow: c 10:200 rwm | |||

lxc.mount.entry: /dev/net/tun dev/net/tun none bind,create=file | |||

If the LXC is already running it will need to be shut down and started again for this change to take effect. | |||

* <code>pct reboot 112</code> | |||

= PVE CLI Tools for LXC work... = | = PVE CLI Tools for LXC work... = | ||

* pct (Proxmox Container Toolkit) | * pct (Proxmox Container Toolkit) | ||

* pveam (Proxmox VE Appliance Manager) | * pveam (Proxmox VE Appliance Manager) | ||

Latest revision as of 23:18, 30 September 2025

So far, LXC containers are just like regular Linux (for the most part...) & the same procedures apply when building.

In fact, I have created a large set of containers on my testbed server simply by following the Server Building outlines here on this site.

Heck... These days, I tend to default to Debian LXC containers unless there's a VERY good reason to do otherwise...

Building a Container

Templates

LXC Containers start with a template.

For our example here, we're going to start with a basic Debian 11 template.

This means we need to ensure we have the template on the PVE host.

- Sign into the PVE UI & select your Local datastore

- Select the CT Templates storage

- Click the Templates button

- Select a template package (we're going with debian-11-standard) then hit the Download button
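The same download can also be done from the PVE host's shell with pveam. This is only a rough sketch: it assumes your template storage is named local and that pveam available prints the section in the first column and the file name in the second. latest_template is a made-up helper, not something PVE ships.

```shell
# Hypothetical helper: read `pveam available` output on stdin and print the
# newest template whose file name starts with the given prefix.
latest_template() {
    awk -v p="$1" 'index($2, p) == 1 { print $2 }' | sort -V | tail -n 1
}

# Usage on the PVE host (as root); "local" is an assumed storage name:
#   pveam update
#   tpl=$(pveam available --section system | latest_template debian-11-standard)
#   pveam download local "$tpl"
```

The helper only filters text, so you can try it against the pveam available output before downloading anything.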

Creating the Container

Web UI method

Now that you have a template to start from, you can hit the Create CT button.

For now, we'll create a very basic container. I've found that most of the defaults are fine for single service applications. The only resource I've found a need to increase so far is CPU cores. Memory can be increased later if needed (as can CPU cores) and actual data storage should be handled outside of the boot disk anyhow.

- 1st screen: choose an ID# and a hostname for the container. Then enter the password for root on this container (twice...). Then hit Next

- 2nd screen: select the template we downloaded above. Then hit Next

- 3rd screen: choose a storage location & boot disk size. Then hit Next

- 4th screen: how many CPU cores you want available to the container. Then hit Next

- 5th screen: how much memory you want available to the container. Then hit Next

- 6th screen: set up networking (you'll note it defaults to static addressing... silly...) Then hit Next

- 7th screen: set up DNS (Why in heck this isn't considered part of networking...) Then hit Next

- 8th (final) screen: Look things over to make sure they're the way you want them. Possibly check the Start after created box. Then hit Finish

CLI method

pct create VMID TEMPLATE --hostname HOSTNAME --password PASSWORD --rootfs LOCATION:SIZE --cores CORES --memory RAM --swap SWAP --unprivileged <1|0> --features nesting=<1|0> --net0 name=eth0,bridge=BRIDGE,firewall=<1|0>,ip=<(IPv4/CIDR|dhcp|manual)>

- EXAMPLE (same as defaults in UI, but DHCP for IPv4 & unique identifiers...):

pct create 666 local:vztmpl/debian-11-standard_11.6-1_amd64.tar.zst --hostname LXC-test --password nuggit --rootfs local-lvm:8 --cores 1 --memory 512 --swap 512 --unprivileged 1 --features nesting=1 --net0 name=eth0,bridge=vmbr0,firewall=1,ip=dhcp

- Many more options to be found in the man page for pct
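A container created from the CLI is not started automatically. Here is a small sketch of starting it and waiting until PVE reports it as running. start_and_wait is a hypothetical wrapper, the 30 second limit is arbitrary, and it assumes pct status prints a line containing "status: running".

```shell
# Hypothetical wrapper; run on the PVE host.
# $1 = VMID of the container to start.
start_and_wait() {
    vmid="$1"
    pct start "$vmid" || return 1
    i=0
    # Poll once per second, giving up after roughly 30 seconds.
    while [ "$i" -lt 30 ]; do
        if pct status "$vmid" | grep -q 'status: running'; then
            return 0
        fi
        sleep 1
        i=$((i + 1))
    done
    return 1
}

# Usage:
#   start_and_wait 666 && pct enter 666
```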

Configuring & Using the Container

Congratulations!

You have an LXC container.

The only user account currently configured is root.

There will be minor oddities, but basically, it's a lightweight VM.

At this point, for all intents and purposes, it works just like a normal Linux VM. You can sign in at its console and set it up like you would a VM.

I'd suggest adding a regular user so that you can then SSH into the container.

I have a set of configurations that I do to pretty much every LXC container I build. Check the CopyPasta entry "For doing basic setup of an LXC (or pretty much any Linux VM...)".

Root Access via SSH

By default a Proxmox LXC container allows root login only with public key authentication.

ATM... To my knowledge, you either have to set up the public key during build of the LXC or mess about grabbing it from your work machine from inside the LXC itself.
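For the "during build" route, pct create has an --ssh-public-keys option that takes a file of public keys. Here is a sketch that reuses the example values from the CLI method above. create_ct_with_key is a hypothetical wrapper, and the key path in the usage note is an assumption (copy your workstation's public key to the host first).

```shell
# Hypothetical wrapper around `pct create`; run on the PVE host.
# $1 = new VMID, $2 = file holding your public key(s), one per line.
create_ct_with_key() {
    pct create "$1" local:vztmpl/debian-11-standard_11.6-1_amd64.tar.zst \
        --hostname LXC-test --rootfs local-lvm:8 \
        --cores 1 --memory 512 --swap 512 \
        --unprivileged 1 --features nesting=1 \
        --net0 name=eth0,bridge=vmbr0,firewall=1,ip=dhcp \
        --ssh-public-keys "$2"
}

# Usage (made-up VMID and key path):
#   create_ct_with_key 667 /root/workstation.pub
```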

To allow root to log in over SSH with a username and password, log in to your Proxmox host and attach to the container with the following command.

lxc-attach --name VMID

(Where VMID is the number of the container)

Edit sshd_config

vi /etc/ssh/sshd_config

and change the line #PermitRootLogin without-password (newer Debian releases ship it as #PermitRootLogin prohibit-password) to PermitRootLogin yes

Restart ssh service for the changes to take effect.

service ssh restart

(In theory, you should be able to set up the public key normally now & then revert the sshd_config change.)
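If you'd rather not open vi, the sshd_config edit above can be scripted. This sketch assumes GNU sed (which Debian has). enable_root_password_login is a made-up helper that keeps a .bak copy of the file before touching it.

```shell
# Hypothetical helper: force "PermitRootLogin yes" in an sshd_config file.
# $1 = path to the file (defaults to /etc/ssh/sshd_config).
enable_root_password_login() {
    cfg="${1:-/etc/ssh/sshd_config}"
    cp "$cfg" "$cfg.bak"
    # Matches the line whether or not it is commented out, and whatever
    # value (without-password, prohibit-password, ...) it currently has.
    sed -i -E 's/^#?[[:space:]]*PermitRootLogin[[:space:]].*/PermitRootLogin yes/' "$cfg"
}

# Usage inside the container, as root:
#   enable_root_password_login && service ssh restart
```

Copying the .bak file back (and restarting ssh again) reverts the change once your key is in place.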

Further Tricks

One useful trick is to add storage to the LXC that's not managed by PVE directly. (For instance, I'm building an LXC for use in backing up & reconfiguring local machines & VMs. I'd like to use a dedicated drive in a hot-swap bay on my server.)

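Apparently, either "Bind Mount Points" (https://pve.proxmox.com/wiki/Linux_Container#_bind_mount_points) or "Device Mount Points" (https://pve.proxmox.com/wiki/Linux_Container#_device_mount_points) is the solution...

This thread (https://forum.proxmox.com/threads/mount-external-hdd-in-lxc-container.50897/) and this one (https://forum.proxmox.com/threads/container-with-physical-disk.42280/) have some helpful discussion...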
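As far as I can tell from the PVE docs, a bind mount point boils down to a single pct set call. This is a sketch only: add_bind_mount is a hypothetical wrapper, the paths in the usage note are made up, and it always uses slot mp0, so it would overwrite an existing mp0 entry.

```shell
# Hypothetical wrapper; run on the PVE host.
# $1 = VMID, $2 = directory on the host, $3 = mount path inside the container.
add_bind_mount() {
    pct set "$1" -mp0 "$2,mp=$3"
}

# Usage (example paths; the drive must already be mounted on the host):
#   add_bind_mount 666 /mnt/hotswap /mnt/backup
```

With an unprivileged container, expect to fiddle with ownership on the host side, since user IDs inside the container are mapped to different IDs outside it.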

Pre-Built Containers

PVE has a bunch of templates available by a company called Turnkey Linux. I've tried a couple... Not overly impressed so far, but YMMV.

Stumbling Blocks & Oddities

Time Zone Settings

Apparently, LXCs default to UTC even if your server is set to your local timezone.

Fortunately, it's not difficult to correct this.

sudo dpkg-reconfigure tzdata

or

- pick another method from this web page: https://itslinuxguide.com/debian-set-timezone/
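Recent PVE versions also seem to have a per-container timezone option that can be set from the host. This is a sketch under that assumption (check man pct on your version for --timezone). set_ct_timezone is a hypothetical wrapper, and the container may need a restart before the change shows up.

```shell
# Hypothetical wrapper; run on the PVE host.
# $1 = VMID, $2 = "host" (copy the host's zone) or a zone name
#      such as Europe/Berlin.
set_ct_timezone() {
    pct set "$1" --timezone "$2"
}

# Usage:
#   set_ct_timezone 666 host
```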

DHCP / DNS Difficulties

For some reason, if I set up a container with both IPv4 & IPv6 using DHCP, resolved will ONLY use IPv6. This is kind of an issue if I want to use IPv4 DNS...

Fortunately, simply setting IPv6 to Static fixes the issue.

Console connection from the PVE UI

LXC containers seem to default to xterm.js rather than the noVNC console standard with regular VMs.

This isn't a bad thing... Just surprised me.

Standard LXC containers built upon Debian seem to block non-root users from using ping...

ping: socket: Operation not permitted

Solution #3 seems most appropriate...

sudo chmod u+s /usr/bin/ping

LXC containers built on Debian don't seem to get usbutils

sudo apt install usbutils

Container Building Tips

Converting unprivileged container to privileged

- Back up the container

- Shut down the container

- Restore from the backup and select "Privileged" in the dialog.
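The same steps can probably be done from the host's shell. This is an untested-on-your-setup sketch: restore_as_privileged is a hypothetical wrapper, it restores to a NEW container ID so the original stays intact, and the storage name local-lvm is an assumption.

```shell
# Hypothetical wrapper; run on the PVE host.
# $1 = existing VMID, $2 = unused VMID for the privileged copy,
# $3 = path to the backup archive made by vzdump.
restore_as_privileged() {
    pct shutdown "$1" &&
        pct restore "$2" "$3" --unprivileged 0 --storage local-lvm
}

# Usage (take the backup first, then pass the archive path that vzdump prints):
#   vzdump 666 --mode stop --storage local
#   restore_as_privileged 666 766 /path/to/the/vzdump-archive
```

Once the new container checks out, the old one can be removed. Until then, don't run both at once if they share a hostname or static IP.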

Adding NFS to an LXC

MUST BE A PRIVILEGED CONTAINER

in: Options / Features

enable Nesting and NFS

Then go ahead & set up NFS as normal.
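The two checkboxes under Options / Features map to the features setting, so they can also be set with pct. A minimal sketch: enable_nfs_features is a hypothetical wrapper, and note that it replaces the whole features string, so add any other features you already use.

```shell
# Hypothetical wrapper; run on the PVE host against a privileged container.
# $1 = VMID.
enable_nfs_features() {
    pct set "$1" --features nesting=1,mount=nfs
}

# Usage (restart the container afterwards so the change applies):
#   enable_nfs_features 666
#   pct reboot 666
```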

Using a VPN (OpenVPN or Tailscale) on an LXC

LXCs do not give access to a /dev/tun device & this is needed for Tailscale to work.

If you're running the LXC on Proxmox, you can add this feature by editing the container's configuration file.

The following instructions are copied (and/or adapted) from this page: https://tailscale.com/kb/1130/lxc-unprivileged

For example, using Proxmox to host an LXC with ID 112, the following lines would be added to /etc/pve/lxc/112.conf:

vi /etc/pve/lxc/112.conf

lxc.cgroup2.devices.allow: c 10:200 rwm

lxc.mount.entry: /dev/net/tun dev/net/tun none bind,create=file

If the LXC is already running it will need to be shut down and started again for this change to take effect.

pct reboot 112
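If you do this to more than one container, the edit can be scripted. In this sketch, add_tun_to_conf is a made-up helper that appends the two lines only if they are missing. It assumes the config file has no snapshot sections (a [name] block near the end), because lines appended after one of those would land in the snapshot rather than the live config.

```shell
# Hypothetical helper; run on the PVE host.
# $1 = path to the container config, e.g. /etc/pve/lxc/112.conf
add_tun_to_conf() {
    conf="$1"
    for line in \
        'lxc.cgroup2.devices.allow: c 10:200 rwm' \
        'lxc.mount.entry: /dev/net/tun dev/net/tun none bind,create=file'
    do
        # Append only when the exact line is not already present.
        grep -qxF "$line" "$conf" || printf '%s\n' "$line" >> "$conf"
    done
}

# Usage:
#   add_tun_to_conf /etc/pve/lxc/112.conf
#   pct reboot 112
#   pct exec 112 -- ls -l /dev/net/tun
```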

PVE CLI Tools for LXC work...

- pct (Proxmox Container Toolkit)

- pveam (Proxmox VE Appliance Manager)